Long-running Cloud Run functions

I’ve seen significant interest from a lot of people in using Cloud Run to run long-running processes. Granted, there is a lot of appeal in simply building a container, pushing it to a fully hosted environment and leaving the rest to the fully managed service.

In this post, I’ll show you how you can achieve this goal with some sample code you can leverage to get started quickly.

But first, and before we dive into the details, there are several reasons why Cloud Run may not be the best choice for batch processes which you should consider carefully:

- Cloud Run is mainly meant for serving interactive web workloads and REST APIs

- In Cloud Run, all the work has to happen within the confines of an HTTP request (the function continues to run for a while after a request ends, but very slowly; this CPU throttling can be disabled with the --no-cpu-throttling flag)

- Cloud Run functions are generally meant to serve multiple requests concurrently from a single instance of the function (by default 80 requests per instance)

- Using external resources (databases, NFS mounts, etc) is more complex

- The default timeout for a request is 5 minutes (while it can be extended to 60 minutes, the HTTP connection to the function needs to stay open, which is subject to network conditions)

- Maximum amount of resources a function instance can have is 4 vCPUs and 16 GB of memory

- When invoked via Pub/Sub, the maximum time Pub/Sub will wait for acknowledgement is 10 minutes

- When invoked via Cloud Scheduler, the maximum attempt deadline is 30 minutes
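Several of the limits in the list above correspond to deploy-time settings. As a rough sketch (not taken from the sample repository's Makefile), the function below shows how they might be passed to gcloud run deploy. The function name, service name, image path and region are placeholders, limiting concurrency to 1 is my own assumption for a one-command-per-instance setup, and the function wrapper only exists so that the snippet can be sourced without side effects.

```shell
# Hypothetical helper: the defaults below are placeholders, not values taken
# from the sample repository.
deploy_long_running() {
  dl_service="${1:-long-cloud-run}"
  dl_image="${2:-gcr.io/my-gcp-project/long-cloud-run}"
  dl_region="${3:-europe-west4}"

  # --timeout=3600       raises the request timeout to the 60 minute maximum
  # --no-cpu-throttling  keeps the CPU allocated outside of request handling
  # --concurrency=1      assumption: one request (one command run) per instance
  gcloud run deploy "$dl_service" \
    --image="$dl_image" \
    --region="$dl_region" \
    --timeout=3600 \
    --no-cpu-throttling \
    --concurrency=1
}
```

Calling deploy_long_running without arguments would deploy with the placeholder values, so pass your own service name, image and region.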

There are also fine alternatives for running batch-like, long-running workloads in Google Cloud Platform:

- Compute Engine instances: Spot VMs in particular are a good way to run (restartable/idempotent) batch processes easily

- Kubernetes Jobs on GKE: Autopilot can take away a lot of the overhead of managing a GKE cluster

- Cloud Composer

- App Engine tasks

- Dataproc

- (Cloud Build)

- etc

Now, let’s ignore all the caveats and try to make a reasonably resilient Cloud Run function that executes for 45 minutes. To achieve that, we’ll try to solve the following:

- Allow execution of arbitrary commands without customization

- Keep the HTTP connection alive by streaming status via a long-running request

We will ignore the recommendation to make the function idempotent (see "Setting request timeout" in the Cloud Run documentation), since that cannot be achieved for arbitrary commands.
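To illustrate the second goal, here is a minimal sketch of the heartbeat idea in plain shell: run an arbitrary command in the background and print a status line at a fixed interval until it finishes. This is not the wrapper from the repository, and it leaves out the HTTP part entirely; in a real wrapper these lines would be written to the chunked HTTP response. The function name run_with_heartbeat and its arguments are hypothetical.

```shell
# run_with_heartbeat INTERVAL COMMAND [ARGS...]
# Hypothetical helper: runs COMMAND and prints a status line every INTERVAL
# seconds so that whoever reads the output keeps receiving data.
run_with_heartbeat() {
  hb_interval="$1"
  shift
  hb_start=$(date +%s)
  echo "Running command: $1"

  # Start the actual command in the background.
  "$@" &
  hb_pid=$!

  # Start a ticker that reports the elapsed time until it is killed.
  (
    while sleep "$hb_interval" >/dev/null; do
      echo "[Still waiting for command to complete: $1 --- $(( $(date +%s) - hb_start ))s]"
    done
  ) &
  hb_ticker=$!

  # Wait for the command, remember its exit status, then stop the ticker.
  hb_status=0
  wait "$hb_pid" || hb_status=$?
  kill "$hb_ticker" 2>/dev/null || true
  wait "$hb_ticker" 2>/dev/null || true

  echo "Command completed in $(( $(date +%s) - hb_start ))s: $1"
  return "$hb_status"
}
```

For example, run_with_heartbeat 5 /long-running.sh would print a status line roughly every five seconds and return the exit status of the script.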

Building the test wrapper

The example wrapper is a simple Cloud Run function that receives a request from either curl or Cloud Pub/Sub and executes a simple Bash script in response.

The Bash script (which would be replaced by the command you want to run) is just a simple loop that prints the date every 10 seconds and terminates after 45 minutes.
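A loop like the one described could look roughly like this. It is a stand-in written for illustration, not the script from the repository; the function name and its two arguments are hypothetical and exist so that the loop can be shortened when experimenting.

```shell
# long_running [ITERATIONS] [INTERVAL]
# Hypothetical stand-in for the test script: prints the date and a counter,
# sleeping INTERVAL seconds between lines.
long_running() {
  lr_total="${1:-270}"     # 270 intervals x 10 s = 45 minutes
  lr_interval="${2:-10}"
  lr_i=0
  while [ "$lr_i" -le "$lr_total" ]; do
    echo "$(date) --- ${lr_i}/${lr_total}"
    # Sleep between iterations, but not after the last line.
    if [ "$lr_i" -lt "$lr_total" ]; then
      sleep "$lr_interval"
    fi
    lr_i=$((lr_i + 1))
  done
}
```

Called without arguments, long_running runs for roughly 45 minutes; long_running 3 1 finishes in about three seconds.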

We’ll clone the repository and make a local test container (for example, in Cloud Shell):

git clone https://github.com/rosmo/long-cloud-run.git

cd long-cloud-run

export PROJECT=my-gcp-project

make run ARGS="echo Hello World"

Let’s invoke the function from another shell window:

# curl -i http://localhost:8080

HTTP/1.1 200 OK

Date: Wed, 12 Jan 2022 16:06:27 GMT

Transfer-Encoding: chunked

Running command: echo

Still waiting command to complete: echo

Hello World

Command completed: echo

We can now also build, push and deploy the function into Cloud Run:

# make deploy

Deploying container to Cloud Run service [long-cloud-run] in project [my-gcp-project] region [europe-west4]

✓ Deploying... Done.

✓ Creating Revision...

✓ Routing traffic...

Done.

Service [long-cloud-run] revision [long-cloud-run-00003-bom] has been deployed and is serving 100 percent of traffic.

Service URL: https://long-cloud-run-12345678-ez.a.run.app

And then invoke the function via curl:

# curl -H "Authorization: Bearer $(gcloud auth print-identity-token)" https://long-cloud-run-12345678-ez.a.run.app

Running command: /long-running.sh

Thu Jan 13 11:01:47 UTC 2022 --- 0/270

[Still waiting for command to complete: /long-running.sh --- 5s]

Thu Jan 13 11:01:57 UTC 2022 --- 1/270

[Still waiting for command to complete: /long-running.sh --- 16s]

Thu Jan 13 11:02:07 UTC 2022 --- 2/270

[Still waiting for command to complete: /long-running.sh --- 29s]

Thu Jan 13 11:02:17 UTC 2022 --- 3/270

[wait 45 minutes...]

Thu Jan 13 11:46:29 UTC 2022 --- 268/270

Thu Jan 13 11:46:39 UTC 2022 --- 269/270

Thu Jan 13 11:46:49 UTC 2022 --- 270/270

Command completed in 45m12s: /long-running.sh

Deploying a real solution

Now let’s try this on a real solution, in this case GCS2BQ, a tool for exporting GCS metadata into BigQuery. First we’ll build the container from the repository:

export PROJECT=my-gcp-project

export BUCKET=my-gcs2bq-bucket

make push \
  DOCKERFILE="Dockerfile.gcs2bq" \
  IMAGE="gcs2bq" \
  FUNCTION_NAME="gcs2bq"

We’ll need to set up a few cloud resources and IAM policies to make sure that GCS2BQ can access the necessary metadata:

# Create service account for GCS2BQ
gcloud iam service-accounts create gcs2bq --project=$PROJECT
export SA="gcs2bq@${PROJECT}.iam.gserviceaccount.com"

# Create custom role for GCS2BQ
curl -Lso role.yaml https://github.com/GoogleCloudPlatform/professional-services/raw/main/tools/gcs2bq/gcs2bq-custom-role.yaml
gcloud iam roles create gcs2bq --project=$PROJECT --file=role.yaml

# Add custom role for service account
gcloud projects add-iam-policy-binding $PROJECT \
  --member="serviceAccount:$SA" \
  --role="projects/$PROJECT/roles/gcs2bq"

# Create bucket and BigQuery dataset for GCS2BQ
gsutil mb -p $PROJECT -c standard -l EU -b on "gs://$BUCKET"
gsutil iam ch "serviceAccount:$SA:admin" "gs://$BUCKET"
bq mk --project_id=$PROJECT --location=EU gcs2bq
gcloud projects add-iam-policy-binding \
  $PROJECT \
  --member="serviceAccount:$SA" \
  --role="roles/bigquery.jobUser"
gcloud projects add-iam-policy-binding \
  $PROJECT \
  --member="serviceAccount:$SA" \
  --role="roles/bigquery.dataEditor"

# Deploy function
make deploy \
  DOCKERFILE="Dockerfile.gcs2bq" \
  IMAGE="gcs2bq" \
  FUNCTION_NAME="gcs2bq" \
  DEPLOYARGS="--service-account=$SA --set-env-vars=GCS2BQ_PROJECT=$PROJECT,GCS2BQ_BUCKET=$BUCKET"

If all went well, we should have the function deployed and now we can call it:

$ curl -H "Authorization: Bearer $(gcloud auth print-identity-token)" https://gcs2bq-12345678-ez.a.run.app

Running command: /run.sh

Google Cloud Storage object metadata to BigQuery, version 0.1

I0113 11:45:07.537847 91 main.go:188] Performance settings: GOMAXPROCS=4, buffer size=1000

W0113 11:45:07.543867 91 main.go:197] Retrieving a list of all projects...

[output removed]

[Still waiting for command to complete: /run.sh --- 11s]

Waiting on bqjob_rf7e37495f772d08_0000017e5341af5e_1 ... (1s) Current status: DONE

Removing gs://sfans-gcs2bq-bucket-234fv/tmp.CLNCoe.avro...

/ [1 objects]

Operation completed over 1 objects.

Command completed in 19s: /run.sh

Now, we don’t want to manually trigger the function every time we want to update our GCS metadata dataset, so let’s set up the function to be triggered by a Pub/Sub message. Please note that Pub/Sub waits up to 10 minutes for a message acknowledgement, so that will cap the duration of the command to a maximum of 10 minutes:

# Set project number
export PROJECT_NUM=12345678

# Create service account for Pub/Sub invoker
gcloud iam service-accounts create gcs2bq-invoker --project=$PROJECT
export INVOKER_SA="gcs2bq-invoker@${PROJECT}.iam.gserviceaccount.com"

# Add permission to invoke the Cloud Run function
gcloud run services add-iam-policy-binding gcs2bq \
  --member="serviceAccount:$INVOKER_SA" \
  --role=roles/run.invoker \
  --region=europe-west4 \
  --project=$PROJECT

# Add permission for Pub/Sub to create tokens for the invoker SA
export PS_SA="service-${PROJECT_NUM}@gcp-sa-pubsub.iam.gserviceaccount.com"
gcloud iam service-accounts add-iam-policy-binding $INVOKER_SA \
  --member="serviceAccount:$PS_SA" \
  --role=roles/iam.serviceAccountTokenCreator \
  --project=$PROJECT

# Create the Pub/Sub topic and subscription
SERVICEURL=$(gcloud run services describe gcs2bq --region=europe-west4 --format="value(status.address.url)" --project=$PROJECT)
gcloud pubsub topics create gcs2bq --project=$PROJECT
gcloud pubsub subscriptions create gcs2bq-sub \
  --topic gcs2bq \
  --ack-deadline=600 \
  --push-endpoint=$SERVICEURL \
  --push-auth-service-account=$INVOKER_SA \
  --project=$PROJECT

# Push a test message to trigger the function
gcloud pubsub topics publish gcs2bq \
  --message="{}" --project=$PROJECT

Now, look into Cloud Logging, and you should see the GCS2BQ tool running!

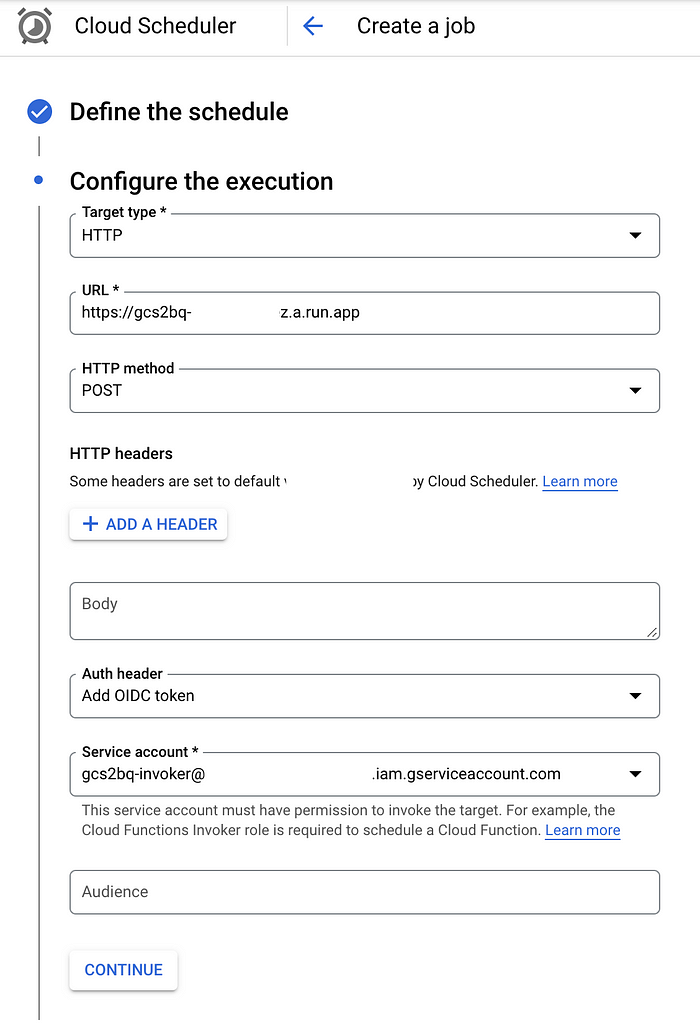

We could also schedule the execution via Cloud Scheduler, which allows a maximum attempt deadline of 30 minutes.
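As a hedged sketch of what such a schedule might look like, the function below creates a Cloud Scheduler HTTP job that calls the Cloud Run service directly with an OIDC token. The function name, job name, cron schedule and default location are hypothetical, and I am assuming that the invoker service account created earlier is reused; check which locations Cloud Scheduler supports in your project.

```shell
# create_schedule SERVICE_URL INVOKER_SA [LOCATION]
# Hypothetical helper: job name, schedule and default location are placeholders.
create_schedule() {
  cs_url="$1"                       # URL of the Cloud Run service
  cs_invoker="$2"                   # service account with roles/run.invoker
  cs_location="${3:-europe-west1}"  # assumption: a supported Scheduler location

  # --attempt-deadline=30m is the maximum mentioned above.
  gcloud scheduler jobs create http gcs2bq-nightly \
    --location="$cs_location" \
    --schedule="0 3 * * *" \
    --uri="$cs_url" \
    --oidc-service-account-email="$cs_invoker" \
    --attempt-deadline=30m
}
```

With the variables from the Pub/Sub setup, this could be invoked as create_schedule "$SERVICEURL" "$INVOKER_SA".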

Wrapping up

Future improvements we’ll probably want to consider:

- If only one instance of the command should be allowed to run at a time, a locking mechanism (e.g. via GCS) should be implemented
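One possible shape for such a lock, sketched under assumptions: create a lock object in GCS with the x-goog-if-generation-match:0 precondition, which makes the upload succeed only if the object does not exist yet. The function names and the LOCK_URI object path are hypothetical, and this is not part of the sample repository.

```shell
# LOCK_URI is a hypothetical object path; point it at a bucket you own.
LOCK_URI="${LOCK_URI:-gs://my-gcs2bq-bucket/gcs2bq.lock}"

# Succeeds only if the lock object does not exist yet (generation 0).
acquire_lock() {
  echo "locked at $(date)" |
    gsutil -q -h "x-goog-if-generation-match:0" cp - "$LOCK_URI"
}

release_lock() {
  gsutil -q rm "$LOCK_URI"
}

# run_locked COMMAND [ARGS...]: runs COMMAND only if the lock was acquired
# and releases the lock afterwards, returning COMMAND's exit status.
run_locked() {
  if ! acquire_lock; then
    echo "Another run holds $LOCK_URI, skipping" >&2
    return 1
  fi
  rl_status=0
  "$@" || rl_status=$?
  release_lock || true
  return "$rl_status"
}
```

Note that a lock like this stays behind if the instance is terminated mid-run, so a production version would also need some way to expire stale locks.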

Thanks for reading!